Celebrated portrait photographers like Richard Avedon, Diane Arbus, and Martin Schoeller made their reputations with distinctive visual styles that sometimes required the careful control of lighting possible only in the studio.

Now MIT researchers, and their colleagues at Adobe Systems and the University of Virginia, have developed an algorithm that could allow you to transfer those distinctive styles to your own cellphone photos. They’ll present their findings in August at Siggraph, the premier graphics conference.

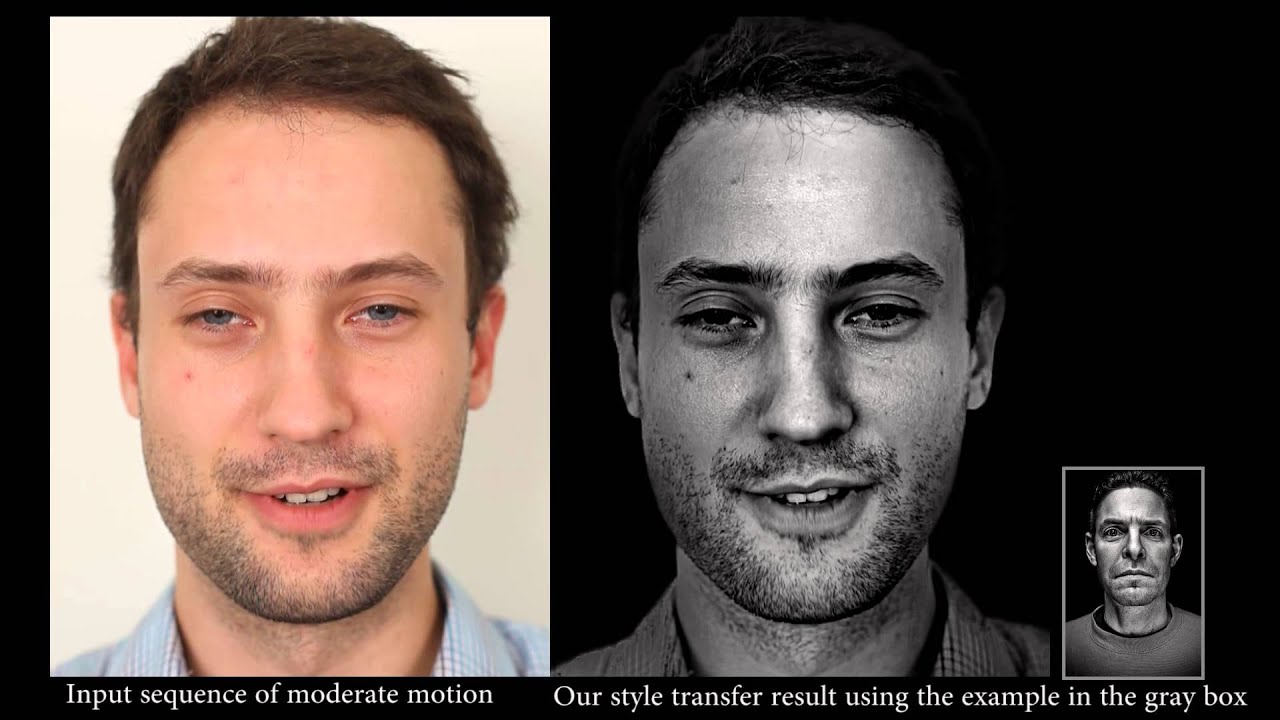

“Style transfer” is a thriving area of graphics research — and, with Instagram, the basis of at least one billion-dollar company. But standard style-transfer techniques tend not to work well with close-ups of faces, says YiChang Shih, an MIT graduate student in electrical engineering and computer science and lead author on the Siggraph paper.

“Most previous methods are global: From this example, you figure out some global parameters, like exposure, color shift, global contrast,” Shih says. “We started with those filters but just found that they didn’t work well with human faces. Our eyes are so sensitive to human faces. We’re just intolerant to any minor errors.”
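To make the idea of "global parameters" concrete, here is a minimal sketch of a global transfer. It assumes grayscale intensities in the range 0 to 1 stored as flat lists, and it matches only two statistics, the mean (roughly, exposure) and the standard deviation (roughly, global contrast). The function name `global_transfer` is hypothetical, and this is a simplified illustration of the general approach, not the specific prior methods Shih refers to.

```python
from statistics import mean, pstdev

def global_transfer(target, example):
    """Shift and scale every target pixel by the same amounts so that the
    target's overall mean and spread match those of the example."""
    t_mean, t_std = mean(target), pstdev(target)
    e_mean, e_std = mean(example), pstdev(example)
    # One gain for the whole image; fall back to 1.0 for a flat target.
    gain = e_std / t_std if t_std > 0 else 1.0
    # Clamp to the valid intensity range (assumed here to be 0..1).
    return [min(1.0, max(0.0, (p - t_mean) * gain + e_mean)) for p in target]

flat_photo = [0.4, 0.5, 0.6]      # low-contrast target (made-up values)
styled_example = [0.2, 0.5, 0.8]  # higher-contrast example (made-up values)
result = global_transfer(flat_photo, styled_example)
```

Because a single gain and offset are applied everywhere, eyes, skin, and background are all adjusted identically, which is the limitation the quote describes.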

So Shih and his coauthors — his joint thesis advisors, MIT professors of computer science and engineering Frédo Durand and William Freeman; Sylvain Paris, a former postdoc in Durand’s lab who’s now with Adobe; and Connelly Barnes of the University of Virginia — instead perform what Shih describes as a “local transfer.”

Acting locally

Using off-the-shelf face recognition software, they first identify a portrait, in the desired style, that has characteristics similar to those of the photo to be modified. “We then find a dense correspondence — like eyes to eyes, beard to beard, skin to skin — and do this local transfer,” Shih explains.

One consequence of local transfer, Shih says, is that the researchers’ technique works much better with video than its predecessors, which used global parameters. Suppose, for instance, that a character on-screen is wearing glasses, and when she turns her head, light reflects briefly off the lenses. That flash of light can significantly alter the global statistics of the image, and a global modification could overcompensate in the opposite direction. But with the researchers’ new algorithm, the character’s eyes are modified separately, so there’s less variation in the rest of the image from frame to frame.
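The glasses example can be illustrated with a toy calculation. The sketch below assumes each frame has already been split into labeled regions (the researchers use a dense correspondence rather than coarse labels), and it models a "modification" as a simple brightness offset. All pixel values, region names, and function names are hypothetical.

```python
from statistics import mean

def global_offsets(frame, desired_mean):
    """One brightness offset for the whole frame, computed from all pixels."""
    all_pixels = [p for region in frame.values() for p in region]
    offset = desired_mean - mean(all_pixels)
    return {name: offset for name in frame}

def local_offsets(frame, desired_means):
    """A separate brightness offset per region, computed from that region only."""
    return {name: desired_means[name] - mean(pixels)
            for name, pixels in frame.items()}

skin = [0.60, 0.62, 0.58, 0.60]
frame_a = {"eyes": [0.30, 0.35], "skin": skin}  # no reflection
frame_b = {"eyes": [0.95, 0.98], "skin": skin}  # light flashes off the lenses

style = {"eyes": 0.40, "skin": 0.50}  # made-up per-region targets

global_a = global_offsets(frame_a, 0.50)["skin"]
global_b = global_offsets(frame_b, 0.50)["skin"]
local_a = local_offsets(frame_a, style)["skin"]
local_b = local_offsets(frame_b, style)["skin"]
```

In this toy setup the global correction applied to the skin changes between the two frames even though the skin pixels are identical, while the local correction for the skin stays the same. That frame-to-frame jump in the global case is what shows up as flicker in video.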

Even local transfer, however, still failed to make modified photos look fully natural, Shih says. So the researchers added another feature to their algorithm, which they call “multiscale matching.”

“Human faces consist of textures of different scales,” Shih says. “You want the small scale — which corresponds to face pores and hairs — to be similar, but you also want the large scale to be similar — like nose, mouth, lighting.”

Of course, modifying a photo at one scale can undo modifications at another. So for each new image, the algorithm generates a representation called a Laplacian pyramid, which lets it identify characteristics that are distinctive at different scales and that tend to vary independently of one another. It then concentrates its modifications on those.
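A Laplacian pyramid stores, for each scale, the detail that is lost when the image is reduced to the next coarser scale, plus one small residual. The sketch below is a simplified one-dimensional version operating on a single row of pixels. It assumes pair averaging in place of the Gaussian blur normally used, and the function names are hypothetical. It is meant to show the decomposition itself, not the researchers' implementation.

```python
def downsample(signal):
    """Halve the resolution by averaging neighboring pairs."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]

def upsample(signal, length):
    """Stretch back to `length` samples by repeating each value."""
    return [signal[min(i // 2, len(signal) - 1)] for i in range(length)]

def build_laplacian_pyramid(signal, levels):
    """Return [finest detail, ..., coarsest detail, residual].
    Assumes the signal keeps at least 2 samples at every level."""
    pyramid, current = [], list(signal)
    for _ in range(levels):
        coarse = downsample(current)
        predicted = upsample(coarse, len(current))
        # Detail layer: what the coarser version fails to capture.
        pyramid.append([c - p for c, p in zip(current, predicted)])
        current = coarse
    pyramid.append(current)  # low-resolution residual
    return pyramid

def reconstruct(pyramid):
    """Invert the decomposition by adding detail back, coarse to fine."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        predicted = upsample(current, len(detail))
        current = [d + p for d, p in zip(detail, predicted)]
    return current

row = [0.2, 0.4, 0.3, 0.5, 0.8, 0.6, 0.7, 0.9]  # made-up pixel row
pyramid = build_laplacian_pyramid(row, levels=2)

# Exaggerate only the finest scale (pore-like detail), leave the rest alone.
edited = [[2 * d for d in pyramid[0]]] + pyramid[1:]
sharpened = reconstruct(edited)
```

In this sketch, doubling the finest layer changes neighboring-pixel differences but leaves every pair average, and therefore all coarser structure, unchanged. That separation is what lets an edit at one scale avoid undoing an edit at another.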

Future uses

The researchers found that copying stylistic features of the eyes in a sample portrait — characteristic patterns of light reflection, for instance — to those in the target image could result in apparent distortions of eye color, which some subjects found unappealing. So the prototype of their system offers the user the option of turning that feature off.

Shih says that the technique works best when the source and target images are well matched — and when they’re not, the results can be bizarre, like the superimposition of wrinkles on a child’s face. But in experiments involving 94 photos culled from the Flickr photo-sharing site, their algorithm yielded consistently good results.

“We’re looking at creating a consumer application utilizing the technology,” says Robert Bailey, now a senior innovator at Adobe’s Disruptive Innovation Group, who was previously director of design at Picasa and, after Picasa’s acquisition by Google, led the design of Picasa Web Albums. “One of the things we’re exploring is remixing of content.”

Bailey agrees that the researchers’ technique is an advance on conventional image filtering. “You can’t get stylizations that are this strong with those kinds of filters,” he says. “You can increase the contrast, you can make it look grungy, but you’re not going to fundamentally be able to change the lighting effect on the face.”

By contrast, the new technique “can be quite dramatic,” Bailey says. “You can take a photo that has relatively flat lighting and bring out portrait-style pro lighting on it and remap the highlights as well.”