The concept of turning data into music has been around for centuries. Ancient philosophers believed that proportions and patterns in the movements of the sun, moon, and planets created a celestial “Musica Universalis.” But that “music of the spheres,” as it’s known, was metaphorical. Now MIT researchers have invented a new platform, called Quantizer, which live-streams music driven by real-time data from research that explores the very beginnings of our universe.

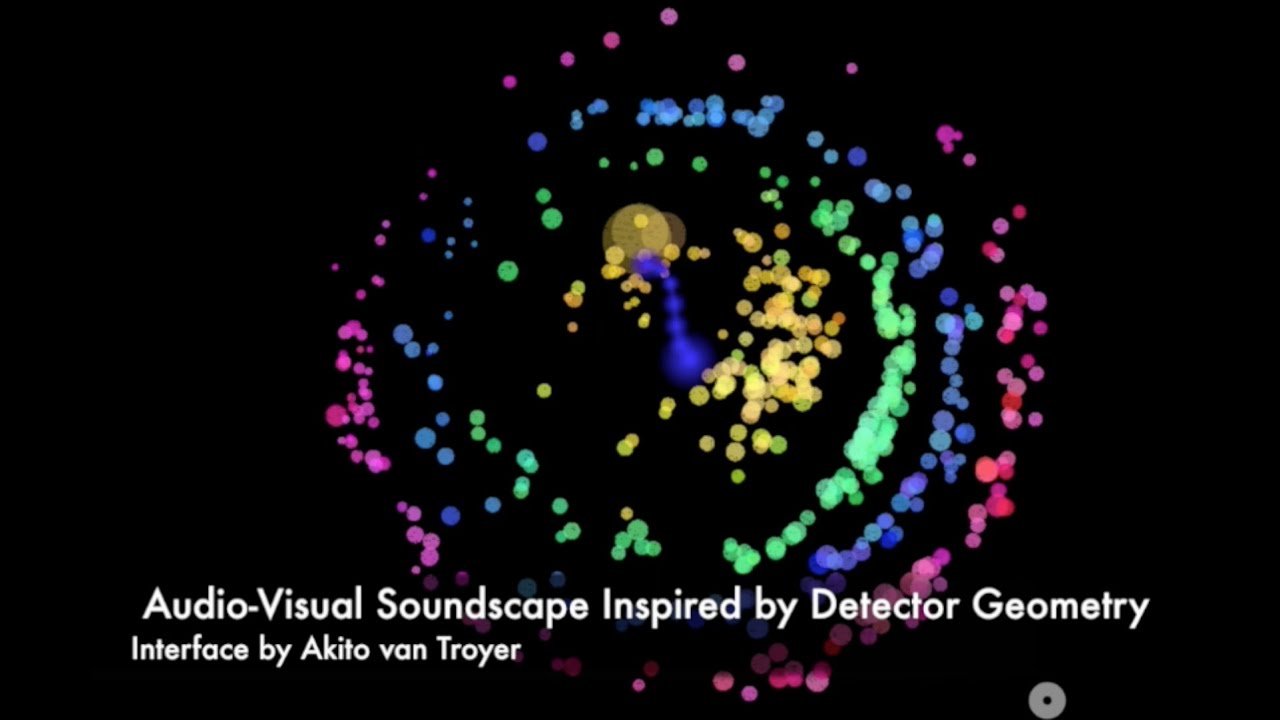

The ATLAS experiment has granted MIT Media Lab researchers unique access to a live feed of particle collisions detected at the Large Hadron Collider (LHC), the world’s largest particle accelerator, at CERN in Switzerland. A subset of the collision event data is routed to Quantizer, an MIT-built sonification engine that captures the incoming raw data and converts it into sounds, notes, and rhythms in real time.

“You can imagine that when these protons collide almost at light speed in the LHC’s massive tunnel, the detector ‘lights up’ and can help us understand what particles were created from the initial collisions,” says Juliana Cherston, a master’s student in the Responsive Environments group at the MIT Media Lab. Her group director and adviser is Joe Paradiso, who worked at CERN on early LHC detector designs and has long been interested in mapping physics data to music. Cherston conceived Quantizer and designed it in collaboration with Ewan Hill, a doctoral student from the University of Victoria in Canada.

Cherston says that “part of the platform’s job is to intelligently select which data to sonify — for example, by streaming only from the regions with highest detected energy.” She explains that Quantizer gets real-time information, such as energy and momentum, from different detector subsystems. “The data we use in our software relates, for example, to geometric information about where the energies are found and not found, as well as the trajectories of particles that are built up in the ATLAS detector’s innermost layer.”
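The selection step Cherston describes, streaming only from the regions with the highest detected energy, can be pictured with a minimal Python sketch. Everything here is an illustrative assumption: the `Region` type, its field names, and the sample values are hypothetical and do not reflect the actual ATLAS data format or Quantizer's code.

```python
import heapq
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Region:
    """Hypothetical detector region; not the real ATLAS data layout."""
    name: str
    energy_gev: float


def select_hottest(regions: Iterable[Region], n: int) -> List[Region]:
    """Return the n regions with the highest energy, highest first."""
    return heapq.nlargest(n, regions, key=lambda r: r.energy_gev)


# Made-up sample values, for illustration only.
event = [
    Region("A", 12.5),
    Region("B", 87.0),
    Region("C", 3.2),
    Region("D", 45.1),
]

hottest = select_hottest(event, 2)
print([r.name for r in hottest])
```

Keeping only the top few regions per event is one simple way to thin a dense data feed before it reaches the audio stage; the real platform may select data quite differently.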

Tapping into the geometry and energy of the collision events, the sonification engine scales and shifts the data so that the output is audible. In its default mode, Quantizer maps that output onto musical scales, such as chromatic and pentatonic, from which composers can build music or soundscapes. They can also map the data to sonic parameters that are not tied to specific musical scales or other conventions, allowing a freer audio interpretation. Anyone can listen in near real time through an audio stream on the platform’s website. So far, the site includes three music streams, which change according to the data feed, in mappings related to genres that the creators term cosmic, suitar samba, and house. The team soon plans to add other compositions that map the data into additional musical pieces that could sound quite different. In principle, Quantizer behaves much like a reimagined radio in which people can “tune in” to ATLAS data interpreted via the aesthetics of different composers.
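The scale-and-shift step and the default mapping onto musical scales described above can be sketched in a few lines of Python. This is a generic illustration under stated assumptions, not Quantizer's implementation: the function names, the input range of 0 to 100, the MIDI note range of 48 to 84, and the choice of a major pentatonic scale rooted at middle C are all hypothetical.

```python
from typing import Sequence

# Semitone offsets within one octave (standard music theory).
CHROMATIC = tuple(range(12))
MAJOR_PENTATONIC = (0, 2, 4, 7, 9)


def scale_and_shift(value: float, in_min: float, in_max: float,
                    out_min: float, out_max: float) -> float:
    """Linearly map value from [in_min, in_max] to [out_min, out_max].

    Values outside the input range are clamped so the result stays audible.
    """
    if in_max <= in_min:
        raise ValueError("in_max must be greater than in_min")
    clamped = min(max(value, in_min), in_max)
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


def quantize_to_scale(pitch: float, scale: Sequence[int], root: int = 60) -> int:
    """Snap a continuous MIDI pitch to the nearest note of the given scale.

    Ties are resolved in favor of the lower note.
    """
    candidates = [
        root + 12 * octave + step
        for octave in range(-5, 6)
        for step in scale
    ]
    candidates = [c for c in candidates if 0 <= c <= 127]
    return min(candidates, key=lambda c: (abs(c - pitch), c))


def midi_to_hz(note: int) -> float:
    """Convert a MIDI note number to frequency (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


# Hypothetical reading of 50 on an assumed 0-100 input range.
raw_pitch = scale_and_shift(50.0, 0.0, 100.0, 48.0, 84.0)
note = quantize_to_scale(raw_pitch, MAJOR_PENTATONIC)
print(raw_pitch, note, round(midi_to_hz(note), 2))
```

Swapping `MAJOR_PENTATONIC` for `CHROMATIC` changes only the set of allowed notes, which mirrors how one data stream could be heard through different musical scales.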

Quantizing the composer’s vision

Evan Lynch '16 MNG '16, a recent MIT graduate in physics and computer science, composed the cosmic stream on the site. A cellist who minored in music, Lynch collaborated on the project with Cherston at the Media Lab. “I imagined what an LHC collision is like and how the particles go through the different layers of the detector and came up with a general vision of the sounds I wanted to represent that with. Then I worked with Juliana to pipeline the data that would drive that vision so that the specifics of the sound would be based on underlying reality. It is an artistic representation, but it’s based on the real thing.”

“The sonification engine allows composers to select the most interesting data to sonify,” says Cherston, “and provides both default tools for producing more structured audio as well as the option for composers to build their own synthesizers compatible with the processed data streams.” Composers can control some parameters, such as pitch, timbre, audio duration, and beat structures in line with their musical vision, but the music is essentially data-driven.

Quantizer is in its nascent stage, and Cherston says a crucial aspect of the project moving forward is working closely with the composers with whom she shares Quantizer’s code base. Cherston says, “there’s a lot of back-and-forth feedback that informs modifications and new features we add to the platform. My long-term goal would be to simplify and move some of the musical mapping controls to a website for broader access.”

Music unravels physics

Cherston, who worked on the ATLAS experiment when she was a Harvard University undergraduate, says that public outreach is the key reason ATLAS officials have granted MIT access to the experiment’s data for Quantizer. It’s not simply about turning data into music; it’s also about offering a novel way for everyone to connect with the data and experience its characteristics, Cherston says. “It shakes the mind to imagine that you could create aesthetically compelling audio from the same data that allows us to understand some of the most fundamental processes that take place in our universe.”

Paradiso, a former high-energy physicist and longtime musician, agrees. He says that Quantizer shows “how large sources of data emerging all over the world will become a ‘canvas’ for new art. Quantizer lets people connect to the ATLAS experiment in a totally different way. What we hope for is that people will hear and enjoy this; that they’ll relate to physics in a different way, then start to explore the science.”

Though musical interpretations of physics data have been made before, to the Quantizer team’s knowledge, this is the first platform that runs in real time and implements a general framework that can support different compositions. The Quantizer site attracted close to 30,000 visitors in its first month online, after Cherston and her collaborators released their paper at the 2016 Conference on Human Factors in Computing Systems (CHI). They’ll next present the project in July at the annual New Interfaces for Musical Expression (NIME) conference.